What made the PC, Mac, and Linux so great (or not)

Volumes have been written about the computer industry and its various successes and failures. The following is my personal recollection of, and rambling about, some of the turning points in personal computing history.

What made Personal Computers great

The story of the "Personal Computer" started before the IBM PC. The earliest personal computers included machines such as the Altair, TRS-80, Commodore PET, and Apple II. Before the personal computing revolution, you would perform your computing tasks on a huge mainframe or multi-user minicomputer. This computer neither belonged to you nor was under your control. Computing time often cost money, and your tasks were subject to the whims of those in control. Only big companies owned these systems, and it was often considered infeasible for an individual to own or care for a "computer".

As computing hardware got smaller, it became apparent that people could indeed own a microcomputer of one form or another. Once a person no longer had to pay per CPU cycle, they could engage in a wider variety of computing tasks, such as experimenting with figures in a spreadsheet, word processing, playing games, or communicating with other microcomputers. Some of these tasks made personal computing extremely desirable.

The term "personal computer" could be applied to any single-user microcomputer, but in practice a truly "personal" computer had certain characteristics. A personal computer is:

S-100 bus systems like the Altair were all about expandability and configurability. In fact, a base system was often just an expansion bus with a front panel. You could choose from an incredibly diverse variety of CPU cards, memory cards, and I/O cards, or build your own. Later machines, such as the Apple II, provided you with a standard baseline CPU and video, but you could still add a huge variety of custom hardware. Systems that tie all add-ons or upgrades to a single vendor generally have extremely limited expandability and increase the risk of lock-in.

A personal computer must be user programmable. If it can only do things as defined by the manufacturer, then it is just an appliance. Furthermore, a user must be able to write and distribute programs freely. Some computer vendors required licensing fees from anyone who wished to distribute software for their platform.

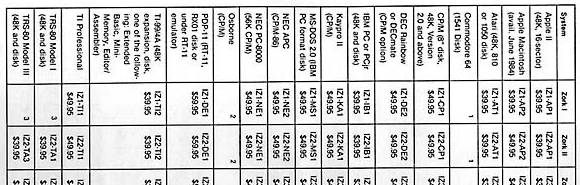

In the early days, there were many computer manufacturers, each with a different, incompatible platform. Even different models from the same vendor were often incompatible. This meant that if you wanted to buy a specific piece of software, you had to check that it was available for your specific platform.

The Apple II

The Apple II series provided a relatively inexpensive, complete computer system marketed towards home users. Many other home-oriented computers of the time could only be expanded using cumbersome external expansion boxes, or required extensive disassembly. The Apple II, however, included eight easily accessible internal expansion slots.

The Apple II had a huge following. There were piles upon piles of software programs available and plenty of third-party expansion hardware. Common Apple II software was the usual hodge-podge of user interface inconsistency, but it is interesting to note that much of that software represents early exploration of, and creativity in, what was even possible. Apple was fairly open about the architecture with programmers and developers, and it did a fair job of supporting backwards compatibility throughout the different models of the Apple II series.

There were numerous attempts at cloning the Apple II, and Apple was fairly hostile towards them. Some early ones required unauthorized copies of the Apple ROMs and didn't get very far. The Franklin was a "clean room" reimplementation, but it suffered because Apple exclusively controlled Apple DOS, ProDOS, and some popular applications, so compatibility was always in doubt. The much later VTech Laser 128 was very popular and considered fairly compatible, but by that time it seemed like Apple just didn't care much about Apple II clones any more.

The IBM PC

When the IBM PC first came out, it was just another proprietary microcomputer among hundreds of others. But several incredibly fortunate things occurred:

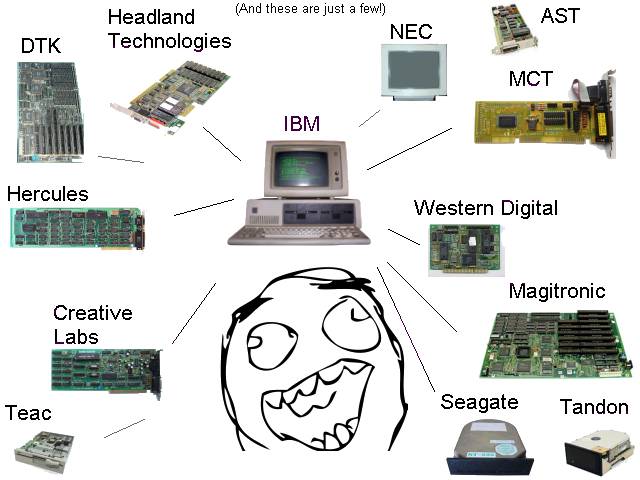

The modular design of the IBM PC enabled the use of faster CPUs and additional devices with minimal compatibility issues. In contrast, upgraded models of other microcomputers often completely changed their architecture.

From the business perspective, fully supported clones meant less business risk. You could invest in DOS software and not have to worry as much that IBM would pull the rug out from under you. You could choose to invest in pure IBM products and their support, knowing that you could fall back on less expensive third-party clones if needed. Or you could save some money by purchasing clones and still interoperate with other businesses using IBM.

From the enthusiast and home user's perspective, you could obtain an inexpensive IBM PC clone and use the same software as your office. Furthermore, you did not have to go back to IBM for upgrades. A wide variety of third parties were creating I/O cards, video cards, hard drives, and more. Thanks to all of the competition, third parties often provided products superior to IBM's own.

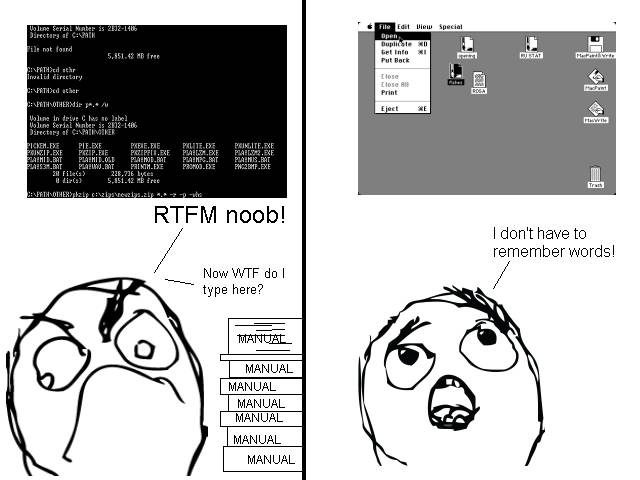

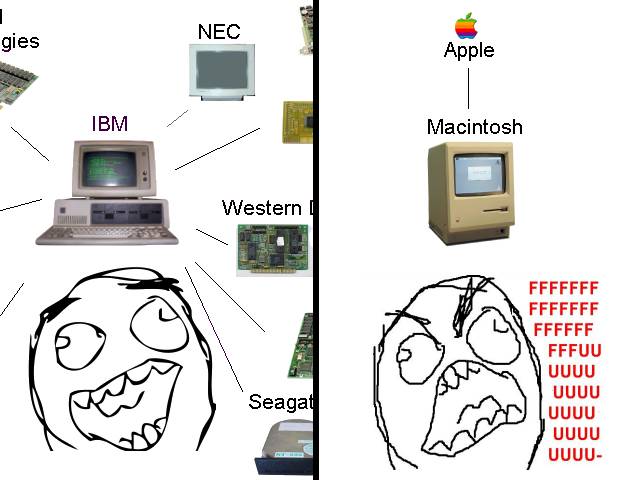

The IBM PC was not tied to a specific operating system. IBM supported DOS, but over the years almost every OS was ported to the IBM PC architecture at some point.

There were a number of drawbacks to the IBM platform. Because the IBM PC was primarily a business platform, it often lagged behind in various areas. For example, during the 80s and early 90s there was little stopping vendors from producing a "multimedia" video card for gaming (with features like multilayer scrolling backgrounds), yet no one did. Meanwhile, platforms like the Amiga prospered because they included such features. There was also a certain fear that innovative third-party devices would be left in the dust whenever IBM came out with its own lackluster version, which somewhat hampered innovation. Even though it could address a whopping one megabyte of memory, the Intel 8088/8086 instruction set was considered backwards and lacking until the 386. The ISA bus had limited speed, and IBM was unwilling to license its later Micro Channel bus on reasonable terms. VESA Local Bus filled the gap until PCI came about. Because there was so much variation in third-party products, they were not always fully compatible with one another, but this was usually a risk people were willing to take for less expensive or more powerful hardware.

The IBM PC was designed to make porting from other existing platforms relatively easy, which gave it a large software base early on. However, IBM did not set any real software design guidelines. DOS had no multitasking, no real resource management, and no standard user interface. DOS programs often did not play nicely with each other and behaved inconsistently. They often inherited oddities and archaisms from their original platforms.

In the end, most of the desired functionality eventually came to IBM PC compatibles, and other computer vendors could not keep up with inexpensive IBM PC clones. The only competitor to really survive was the Apple Macintosh.
And the modern Macintosh is essentially a crippled PC clone, although most of what passes for a "PC" these days is hardly IBM PC compatible any more.

What made the Apple Macintosh insanely great

Needless to say, the Apple Macintosh revolutionized the way people thought about computers. When the Macintosh was first introduced in 1984, most computers were operated through a command line or text-mode interface with inconsistent, undiscoverable functionality.

Graphical systems like the Xerox Star, Visi On, or Apple's own Lisa were targeted at business users and required much beefier, more expensive hardware. The Apple Macintosh was designed to bring computing to the masses using a "user friendly" approach.

Instead of remembering dozens of function keys, you could select the option you wanted from a menu at the top of the screen, and this menu was present and similar in all applications. Instead of digging through a manual and typing obscure commands at a command prompt, you saw your files and folders as graphical icons on the screen. Common file management commands were all handled with drag and drop or selectable through the menu. Instead of exporting data from one application and then manually importing it into another, you could just "copy" data to a clipboard and "paste" it into another application. Instead of remembering a dozen different ways to do the same thing in different applications, there was usually one standard way. The OS itself provided standard menus, windowing controls, dialog controls, and more. (Now, if only Microsoft Windows 8 would implement some of that!!)

The original Macintosh operating system intentionally omitted any kind of command line interpreter. Although scripting languages later became available, this forced Macintosh developers to create complete GUIs for their software. You couldn't half-ass it and tell your users to start the program by typing "progname -f -uuu -obscurecrap:10 -gibberish --RTFM".

Another significant change was the separation of user interface data from the program itself. Dialog boxes, text strings, icons, and menus were stored in separate resources. You could use a resource editor to translate a program to another language without ever having to get near the source code!

Because graphical display and printing facilities were standard in all models, the Macintosh became an ideal platform for desktop publishing and graphics processing.
IBM PC software always had to deal with the possibility that the user might only have a monochrome text-only or low-resolution CGA video card.

The Apple Macintosh hardware itself was also rather unique. Apple designed the case of the integrated CPU and monitor so that you, the user, could not easily open it. The idea was that you should never have to worry about the hardware inside; instead, you were to take the computer into an "authorized Apple dealer" for service or upgrading. Although it was intended for use on a desktop, it was small and light enough that you could pack it up and take it places. It was probably lighter than many "luggables" of the time.

It was among the first computers to use the Sony 3.5" floppy drive. There is an interesting story there: the Macintosh was going to use a 5.25" "Twiggy" drive, but those drives were too unreliable and were swapped out at the last minute.

Like the Lisa, it used a "mouse" as a pointing device. The Macintosh graphical desktop environment was designed so you could perform common operations just by "pointing" at something on the screen and "clicking" the mouse button. In 1984, when the Macintosh was introduced, others were experimenting with other non-keyboard input such as light pens, joysticks, or, yes, even touch screens. The mouse proved itself the winner because it was a simple, inexpensive, replaceable device that a user could operate with their arm at rest on a desk, without dirtying up or wearing out their display.

Initially, there were a number of downsides to the Macintosh:

Early on, the Macintosh lacked software. Existing CP/M, DOS, or Unix software had to be significantly redesigned for use under a graphical user interface.

The original Macintosh was significantly underpowered. Even though it used the powerful Motorola 68000 CPU, it came with only 128k of RAM. The only supported way to upgrade it was to take it back to an Authorized Apple Dealer to replace the entire motherboard with a later 512k model.
No, you couldn't just throw a few bucks at it and get 192k until you were ready for a larger upgrade; it was all or nothing. Because applications used a graphical user interface, they needed more RAM than their counterparts on other systems for pushing graphics around. It also meant the user interface of some applications might run slower than their text-mode counterparts. Because of these factors, many viewed the early Apple Macintosh as an overpriced toy.

Integrated displays are a maintenance risk. The Macintosh was not the only microcomputer to integrate a display monitor. However, computer displays were, and still are, a frequent point of failure. A failure in an integrated display means you are out of business until you have it repaired. With an external monitor, you can be back in business almost instantly if you happen to have a spare. An integrated display also makes it practically impossible to upgrade to a newer/better/bigger display.

What Steve Jobs had really tried to create was a computing appliance that was entirely under Apple's control. On the one hand, it created a shift in the way people thought about separating technical details and hardware from the end user. It was no longer fashionable to make users worry about DIP switches or jumper settings. On the other hand, in practice, people were using the Macintosh as a personal computer and often required custom expandability.

Despite the lack of expandability and upgradability, many third parties developed their own custom upgrades and add-ons. But the methods usually involved dirty "hacks", such as desoldering existing chips and mounting additional circuit boards to expand the RAM, or using an inverted socket to piggyback on the 68000 chip to attach a SCSI controller. Some later models of the Apple Macintosh bowed to consumer demand and provided internal general-purpose expansion slots and user-accessible RAM.

Unlike DOS on the IBM PC, the Macintosh operating system was exclusively controlled by Apple. That, plus the greater difficulty of implementing a compatible ROM, effectively prevented third parties from creating Macintosh clones. The few clones that did exist had to be licensed by Apple, and their makers were entirely at Apple's mercy until Apple pulled the plug, putting them out of business.

Despite the negative aspects, people wanted the "simple" Mac, and the Mac gained popularity. IBM PCs didn't even start to standardize on Microsoft Windows until the early 90s, and a truly similar desktop interface was not available until 1995!

What made Linux great

So one day this Finnish fellow says he has put together a tiny Unix-like clone kernel and he is giving it away Free...

Unix was an older multi-user operating system, designed for use by programmers and other computing professionals. It provided advanced features such as user security and, on CPUs that supported them, memory protection and virtual memory. It generally had very high system requirements that made it impractical on a desktop computer, so it was primarily used on large shared multi-user office minicomputers and servers. Numerous vendors packaged their own Unix-based operating systems for use in the corporate "enterprise", and often charged obscene amounts of money.

Linux cloned the Unix-style environment, providing many of the same functional advantages and disadvantages. The great thing about Linux was that it was completely Open Source. That is, you could download, modify, and redistribute the source and compiled software with no licensing fees and virtually no restrictions. Also, since it was free from any central corporate ownership, there was no risk that it might disappear when a company went out of business (like BeOS). Furthermore, the use of open source reduced the risk that development might go down some undesirable path: in practice, if a project is popular enough, a "fork" will quickly pop up and the undesirable version will die off.

Linux was highly portable. This meant you were not necessarily tied to a specific computer architecture, giving you more choice. Use of Linux spread throughout educational circles. As it grew, it became robust enough to replace many proprietary Unix installations, but it saw little adoption on the desktop.

Unfortunately, Linux inherited many technical archaisms that never got purged. For example, there is the hideous "/dev" folder that you, the user, can browse into. It contains messy technical internals of the system that you should never, ever, ever, EVER see. This folder should not be a physical part of your file system, or it should at least be hidden. References to "teletypes", prefixing filenames with periods to "hide" them, multi-user permissions on single-user systems, one single file system rather than organizing things into drives, file name case sensitivity: the list of archaisms just goes on and on and on and on.

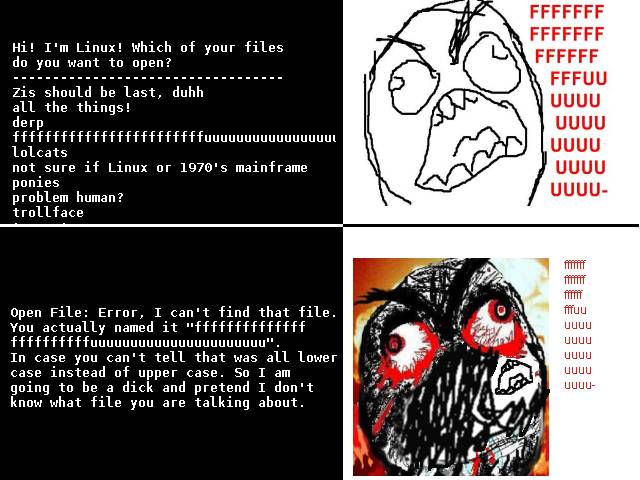

There was no standard GUI. Unix and Linux were simply not designed for desktop computing; they were designed to run powerful, unseen, backend software. System tools were script-oriented and did not fit well into a graphical environment, so they commonly ran without a GUI. A common, hilarious excuse for not running a GUI on Linux was "it takes too much RAM". The Mac did its thing in 128K, and even Windows 95 could run in as little as 4 megabytes. That wasn't the issue. The issue was that the Unix and Linux toolsets just didn't gain any advantage from running in a GUI. Had Unix started out with an integrated Mac-like GUI, it would have been necessary to invent a command line.

Over the years, a huge number of GUI "toolkits" popped up. This often meant that different applications running on the same computer would have a radically different "look and feel", and sometimes such applications would fail to interoperate with each other.

Many of the different parts of Linux did not "integrate" well. In the early days you had to run a whole series of commands just to access the files on a damn floppy disk. You could almost hear some kernel developer crying that the drive didn't let them know when the disk was inserted or ejected, and some security guru insisting that only privileged administrators should "mount" file systems.

Anyway, Linux became popular in servers, embedded devices, and smartphones.

The "end" of personal computing?

Big companies were happy to oblige for a monthly fee and additional charges per megabyte. Most of these devices were locked down and served only to display content. Congratulations, idiots, you just took a dump on more than 30 years of progress.